from IPython.core.display import display, HTML

display(HTML("<style>.container { width:100% !important; }</style>"))

Deep Learning with Tensorflow

(IST Austria)

Lecture 1, Nov 28, 2017

import socket

print("Running on computer: ", socket.gethostname())

Running on computer: gpu62

# To support both python 2 and python 3

from __future__ import division, print_function, unicode_literals

# Common imports

import tensorflow as tf

import numpy as np

import os

# to make this notebook's output stable across runs

def reset_graph(seed=42):

    tf.reset_default_graph()

    tf.set_random_seed(seed)

    np.random.seed(seed)

from IPython.display import clear_output, Image, display, HTML

def strip_consts(graph_def, max_const_size=32):

    """Strip large constant values from graph_def."""

    strip_def = tf.GraphDef()

    for n0 in graph_def.node:

        n = strip_def.node.add()

        n.MergeFrom(n0)

        if n.op == 'Const':

            tensor = n.attr['value'].tensor

            size = len(tensor.tensor_content)

            if size > max_const_size:

                tensor.tensor_content = b"<stripped %d bytes>"%size

    return strip_def

def show_graph(graph_def, max_const_size=32):

    """Visualize TensorFlow graph."""

    if hasattr(graph_def, 'as_graph_def'):

        graph_def = graph_def.as_graph_def()

    strip_def = strip_consts(graph_def, max_const_size=max_const_size)

    code = """

        <script>

          function load() {{

            document.getElementById("{id}").pbtxt = {data};

          }}

        </script>

        <link rel="import" href="https://tensorboard.appspot.com/tf-graph-basic.build.html" onload=load()>

        <div style="height:600px">

          <tf-graph-basic id="{id}"></tf-graph-basic>

        </div>

    """.format(data=repr(str(strip_def)), id='graph'+str(np.random.rand()))

    iframe = """

        <iframe seamless style="width:800px;height:620px;border:1" srcdoc="{}"></iframe>

    """.format(code.replace('"', '&quot;')) # escape quotes so the markup survives inside srcdoc="..."

    display(HTML(iframe))

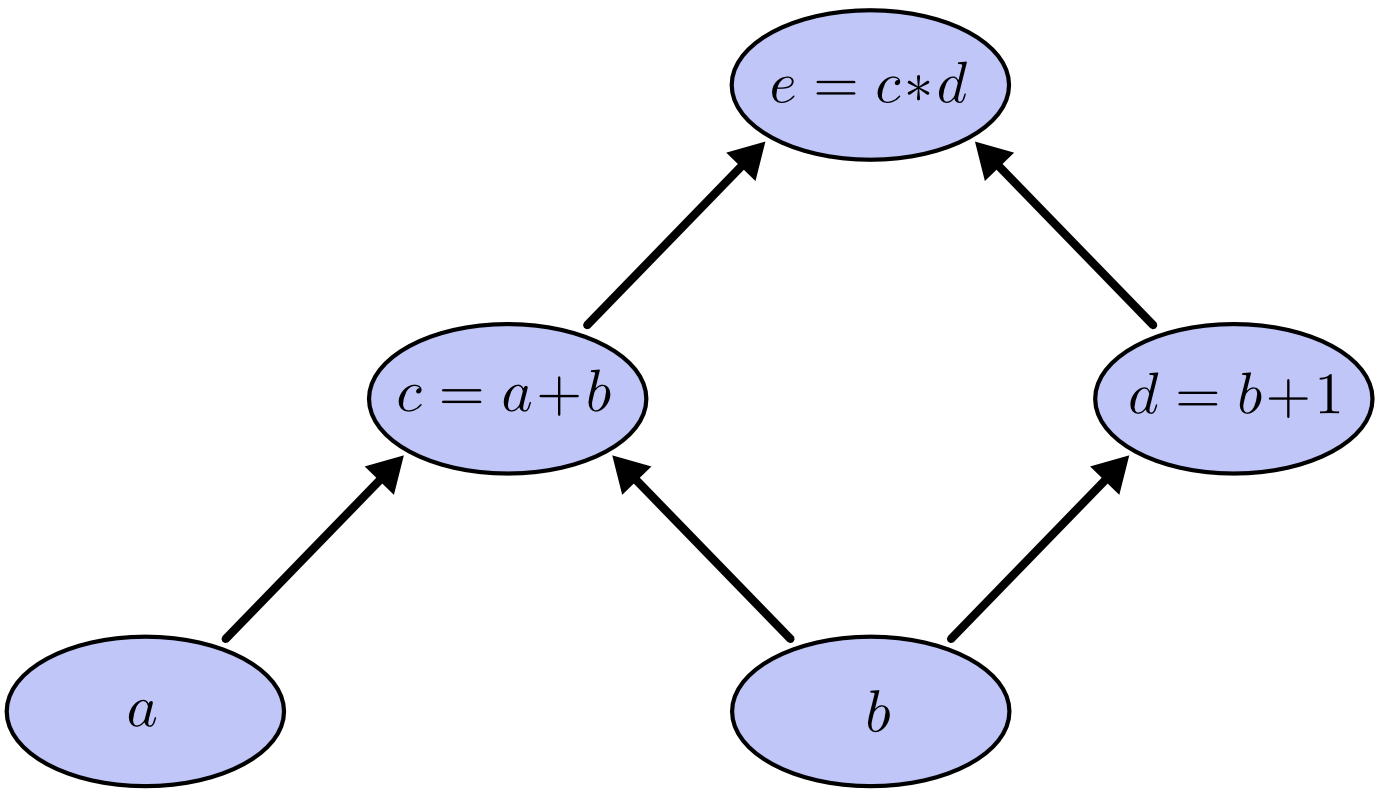

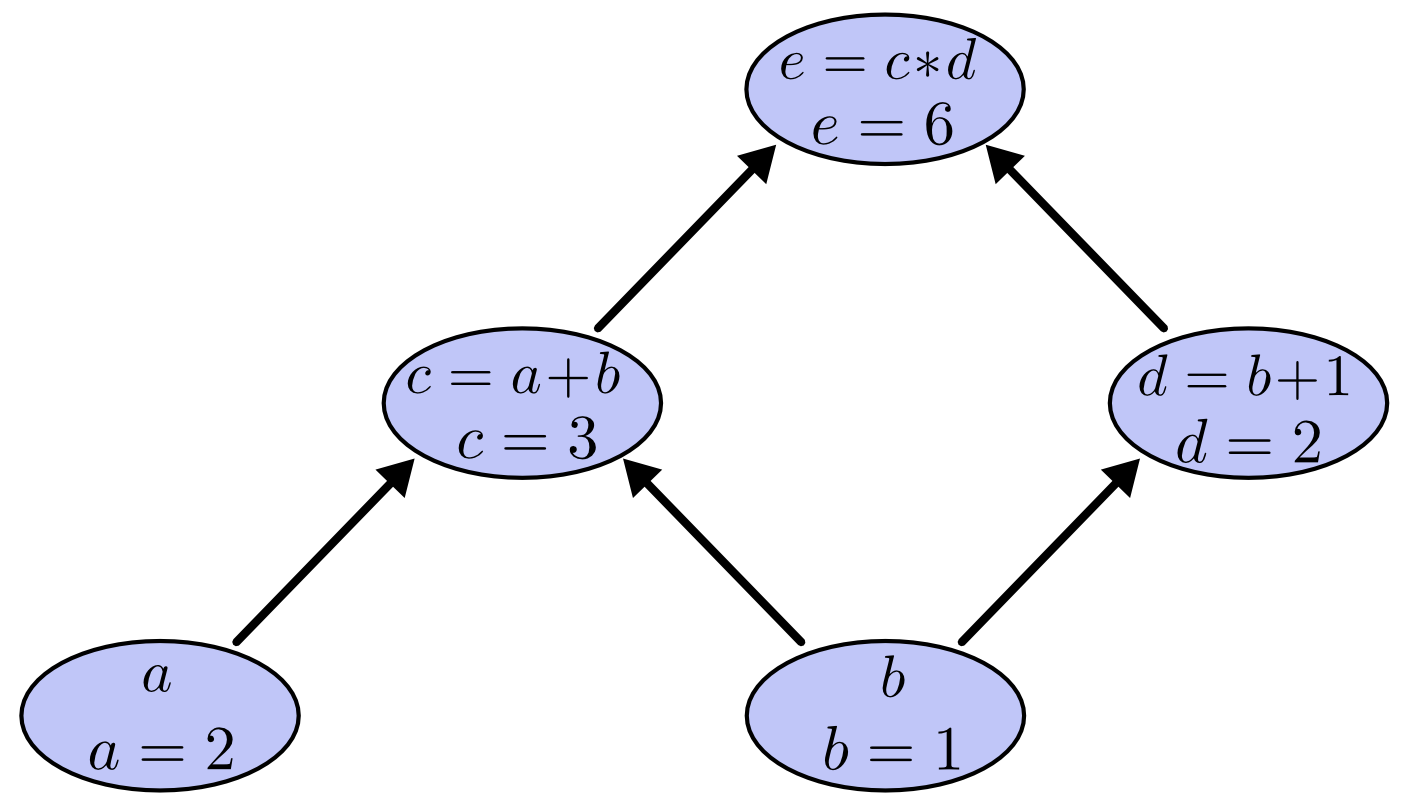

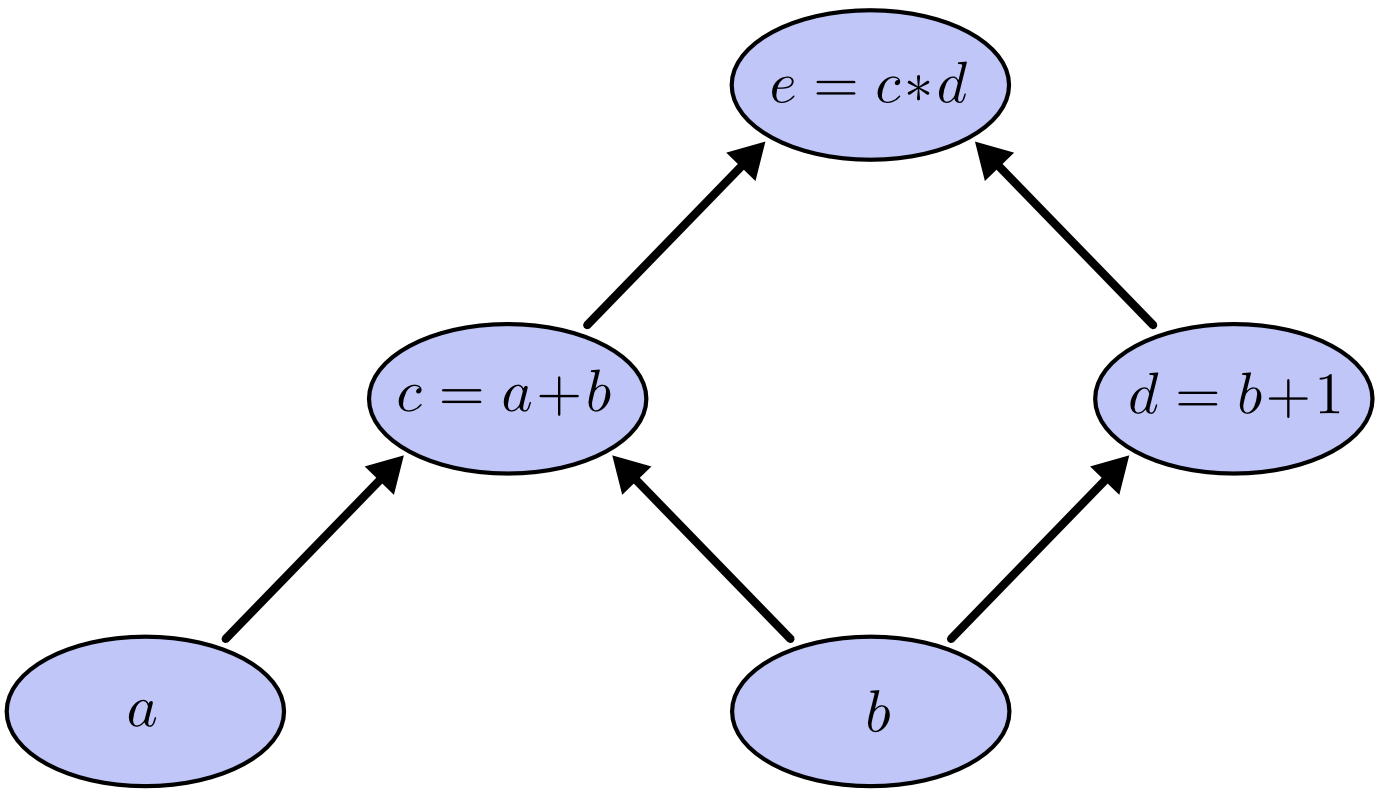

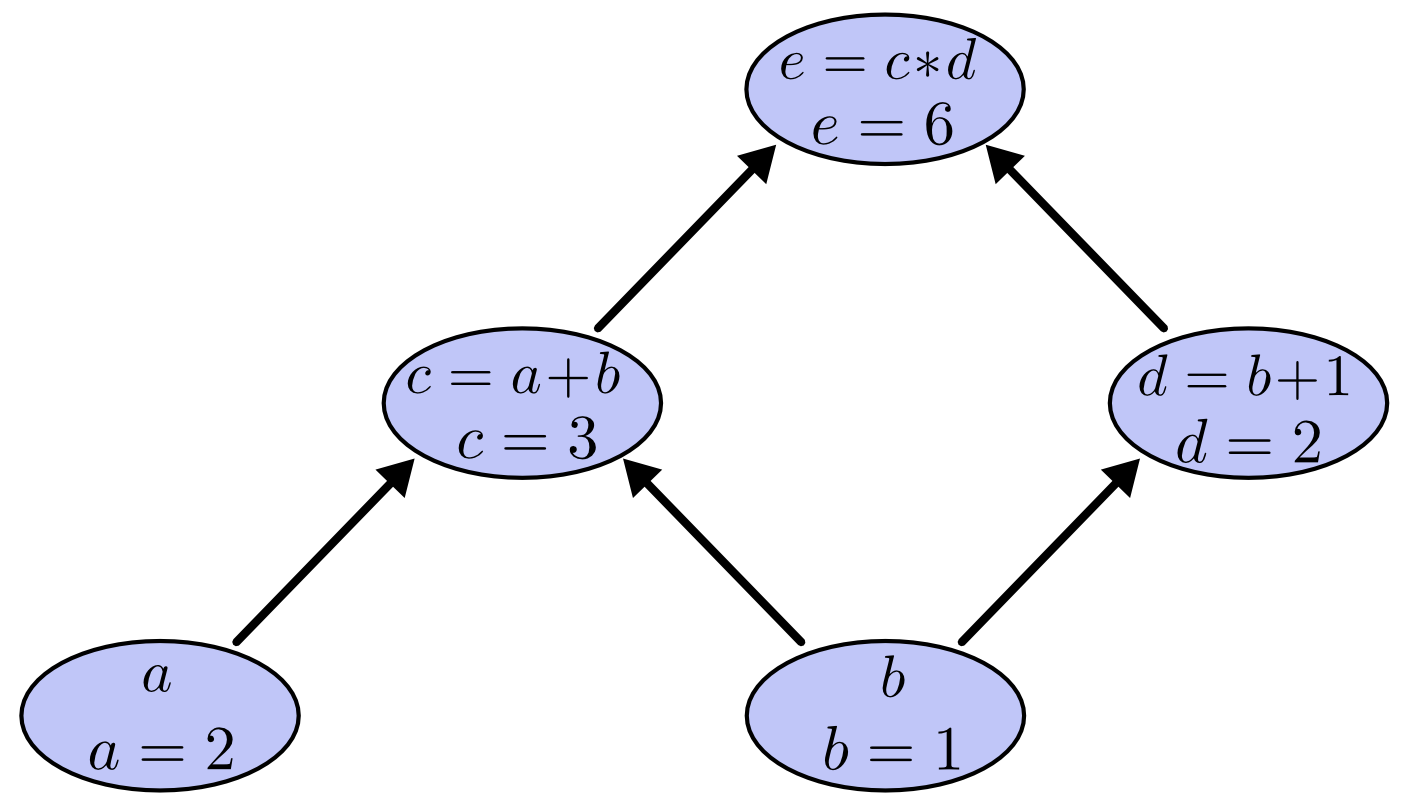

Computation Graphs

How to compute?

$$(a+b)*(b+1)$$

The formula itself gives the instructions for computing it:

add $a$ and $b$ (let's call the intermediate result $c$)

add $b$ and $1$ (let's call the intermediate result $d$)

multiply $c$ and $d$ (let's call the result $e$)

To actually execute the instructions, we need to provide values for the placeholders $a$ and $b$.

A computation graph describes the schematics of the computation.

A node can be evaluated if its parents have values (if necessary, after evaluating the parents).
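The evaluation rule above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the dictionary `graph` and the function `evaluate` are hypothetical names made up here, and the code is not how TensorFlow works internally.

```python
# Hypothetical mini-graph for (a+b)*(b+1); not TensorFlow code.
# Each node maps to (operation, parent names); placeholders have no operation.
graph = {
    "a": (None, ()),
    "b": (None, ()),
    "one": (lambda: 1.0, ()),
    "c": (lambda x, y: x + y, ("a", "b")),
    "d": (lambda x, y: x + y, ("b", "one")),
    "e": (lambda x, y: x * y, ("c", "d")),
}


def evaluate(name, feed):
    """Return the value of node `name`, evaluating its parents first if needed."""
    if name in feed:                      # value supplied from outside
        return feed[name]
    op, parents = graph[name]
    if op is None:                        # placeholder without a value
        raise ValueError("no value fed for placeholder %r" % name)
    return op(*[evaluate(p, feed) for p in parents])


print(evaluate("e", {"a": 2.0, "b": 1.0}))  # (2+1)*(1+1) = 6.0
```

Building the dictionary computes nothing; values only appear once `evaluate` is called with concrete inputs.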

In Python, execution happens immediately, as soon as each statement is reached

reset_graph()

try:

del a,b

except NameError:

pass

#/usr/bin/python

(a+b)*(b+1)

NameError Traceback (most recent call last)

<ipython-input-226-171fa12eb6c2> in <module>()

1 #/usr/bin/python

----> 2 (a+b)*(b+1)

NameError: name 'a' is not defined

#/usr/bin/python

a = 2 # provide values for placeholders 'a' and 'b'

b = 1

(a+b)*(b+1) # now it works

6

reset_graph()

del a,b

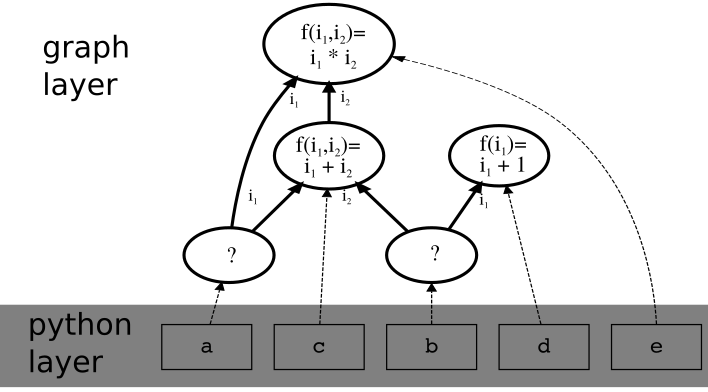

In Tensorflow, building the graph and executing it are two separate steps:

- build a graph

- evaluate (parts of) it once or multiple times

# load tensorflow

import tensorflow as tf

# build a computation graph

a = tf.placeholder(tf.float32, name="a")

b = tf.placeholder(tf.float32, name="b")

y = tf.constant(1., name="one")

c = tf.add(a, b, name="c")

d = tf.add(b, y, name="d")

e = tf.multiply(c, d, name="e")

print(a)

print(b)

print(y)

print(c)

print(d)

print(e)

Tensor("a:0", dtype=float32)

Tensor("b:0", dtype=float32)

Tensor("one:0", shape=(), dtype=float32)

Tensor("c:0", dtype=float32)

Tensor("d:0", dtype=float32)

Tensor("e:0", dtype=float32)

show_graph(tf.get_default_graph())

Two abstraction layers, important to keep apart: 1. tensorflow graph, 2. python objects(=variables)

reset_graph()

# tensorflow: build a computation graph

a = tf.placeholder(tf.float32)

b = tf.placeholder(tf.float32)

c = a+b # convenient shorthand notation.

d = b+1 # note: operations on graph nodes almost

e = c*d # always create new nodes in the graph

print(a) # a is a python variable, its value is a node in tensorflow's graph

print(b) # b is a python variable, its value is a node in tensorflow's graph

print(c) # c is a python variable, etc.

print(d)

print(e)

Tensor("Placeholder:0", dtype=float32)

Tensor("Placeholder_1:0", dtype=float32)

Tensor("add:0", dtype=float32)

Tensor("add_1:0", dtype=float32)

Tensor("mul:0", dtype=float32)

show_graph(tf.get_default_graph())

reset_graph()

del a,b,c,d,e

# tensorflow: build a computation graph

a = tf.placeholder(tf.float32)

b = tf.placeholder(tf.float32)

e = (a+b)*(b+1) # creates multiple nodes in the graph, one per operation

print(a) # a is a python variable, its value is a node in tensorflow's graph

print(b) # b is a python variable, its value is a node in tensorflow's graph

# intermediate results have graph nodes, but no python variable pointing to them

print(e)

Tensor("Placeholder:0", dtype=float32)

Tensor("Placeholder_1:0", dtype=float32)

Tensor("mul:0", dtype=float32)

show_graph(tf.get_default_graph())

reset_graph()

# graph names can be arbitrary, independent of python

a = tf.placeholder(tf.float32, name="spam")

b = tf.placeholder(tf.float32, name="ham")

e = tf.multiply(a+b,b+1, name="eggs") # creates multiple nodes, one per operation

print(a)

print(b)

print(e)

Tensor("spam:0", dtype=float32)

Tensor("ham:0", dtype=float32)

Tensor("eggs:0", dtype=float32)

show_graph(tf.get_default_graph())

reset_graph()

del a,b,e

# python names can be arbitrary, independent of graph

spam = tf.placeholder(tf.float32)

ham = tf.placeholder(tf.float32)

eggs = (spam+ham)*(ham+1)

print(spam)

print(ham)

print(eggs)

Tensor("Placeholder:0", dtype=float32)

Tensor("Placeholder_1:0", dtype=float32)

Tensor("mul:0", dtype=float32)

show_graph(tf.get_default_graph())

reset_graph()

del spam,ham,eggs

# tensorflow

import tensorflow as tf

a = tf.placeholder(tf.float32, name="a") # duplicate node names are

b = tf.placeholder(tf.float32, name="a") # automatically de-duplicated

e = tf.multiply(a+b,b+1, name="a")

print(a)

print(b)

print(e)

Tensor("a:0", dtype=float32)

Tensor("a_1:0", dtype=float32)

Tensor("a_2:0", dtype=float32)

show_graph(tf.get_default_graph())

reset_graph()

del a,b,e

In Tensorflow, building the graph and executing it are two separate steps:

- build a graph

- evaluate (parts of) it once or multiple times

Evaluation happens in a Session

sess = tf.Session() # open a Session

value_of_e = sess.run(e) # evaluate node 'e'

print("value of e is ", value_of_e)

sess.close() # release resources after use

# build graph

a = tf.placeholder(tf.float32, name="a")

b = tf.placeholder(tf.float32, name="b")

c = a+b

d = b+1

e = c*d

# now evaluate

sess = tf.Session() # open a Session

value_of_e = sess.run(e) # evaluate node 'e'

print("value of e is ", value_of_e)

sess.close() # release resources after use

InvalidArgumentError Traceback (most recent call last)

<ipython-input-96-b2928f055672> in <module>()

7 sess = tf.Session() # open a Session

8

----> 9 value_of_e = sess.run(e) # evaluate node 'e'

10 print("value of e is ", value_of_e)

11

...

1338 except KeyError:

1339 pass

-> 1340 raise type(e)(node_def, op, message)

1341

1342 def _extend_graph(self):

InvalidArgumentError: You must feed a value for placeholder tensor 'b' with dtype float

[[Node: b = Placeholder[dtype=DT_FLOAT, shape=<unknown>, _device="/job:localhost/replica:0/task:0/cpu:0"]()]]

To evaluate a node, each of its parents must either have a value or be evaluable itself.

...same graph as before...

sess = tf.Session() # open a Session

value_of_e = sess.run(e, feed_dict={a: 2, b: 1}) # evaluate node 'e' for some a/b

print("e evaluated for a=2 and b=1 is ", value_of_e)

sess.close() # be a good citizen, release resources after use

e evaluated for a=2 and b=1 is 6.0

sess = tf.Session()

value_of_e = sess.run(e, feed_dict={a: 3, b: 1})

print("e evaluated for a=3 and b=1 is ", value_of_e)

sess.close()

e evaluated for a=3 and b=1 is 8.0

sess = tf.Session()

value = sess.run(e, feed_dict={a: 2, b: 1, c: -1}) # we can specify values for other

print("e evaluated for c=-1 is ", value) # nodes, not just placeholders

value = sess.run(e, feed_dict={b: 1, c: -1}) # if c has a value, a isn't needed

print("e evaluated for c=-1 is ", value)

sess.close()

e evaluated for c=-1 is -2.0

e evaluated for c=-1 is -2.0

sess = tf.Session()

othervalue = sess.run(e, feed_dict={c: -1}) # is c alone enough to evaluate e? no: d = b+1 still needs b

sess.close()

InvalidArgumentError Traceback (most recent call last)

<ipython-input-257-985a2d7ad3cc> in <module>()

1 sess = tf.Session()

2

----> 3 othervalue = sess.run(e, feed_dict={c: -1}) # is c alone enough to evaluate e? no: d = b+1 still needs b

...

InvalidArgumentError: You must feed a value for placeholder tensor 'b' with dtype float

[[Node: b = Placeholder[dtype=DT_FLOAT, shape=<unknown>, _device="/job:localhost/replica:0/task:0/gpu:0"]()]]
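Which placeholders a call to `run` still needs can be worked out by walking the graph upwards from the requested node and stopping at every node that is fed. The following plain-Python sketch does this for the graph of this section. The names `parents`, `placeholders` and `required_placeholders` are hypothetical helpers for illustration, not TensorFlow functions.

```python
# Hypothetical dependency table for the graph of this section: node -> parents.
parents = {
    "a": [], "b": [], "one": [],
    "c": ["a", "b"],
    "d": ["b", "one"],
    "e": ["c", "d"],
}
placeholders = {"a", "b"}


def required_placeholders(target, fed):
    """Placeholders that must be fed to evaluate `target` when the nodes in `fed` already have values."""
    if target in fed:
        return set()          # a fed node cuts off everything above it
    if target in placeholders:
        return {target}
    needed = set()
    for p in parents[target]:
        needed |= required_placeholders(p, fed)
    return needed


print(sorted(required_placeholders("e", fed=set())))       # ['a', 'b']
print(sorted(required_placeholders("e", fed={"c"})))       # ['b']
print(sorted(required_placeholders("e", fed={"b", "c"})))  # []
```

Feeding `c` removes the need for `a`, but `b` is still reachable through `d`, which matches the error message about placeholder 'b'.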

# 'with' construct closes sess automatically after use

with tf.Session() as sess:

    value = sess.run(e, feed_dict={a: 2, b: 1})

    print("e=", value)

e= 6.0

Some caveats

always be aware of the difference between python variables and nodes in the tensorflow graph

reset_graph()

del a,b,c,d,e

# what does this code do?

a = tf.placeholder(tf.float32, name="a")

b = a+1

b = tf.log(b, name="b") # we can re-use python variables

b = b+1

print(a)

print(b)

Tensor("a:0", dtype=float32)

Tensor("add_1:0", dtype=float32)

with tf.Session() as sess:

    value = sess.run(b, feed_dict={a: 2.})

    print("value=", value)

value= 2.09861

reset_graph()

# what does this code do?

a = tf.placeholder(tf.float32, name="a")

a = a+1

a = tf.log(a, name="b") # we can re-use python variables

a = a+1

with tf.Session() as sess:

    value = sess.run(a, feed_dict={a: 2.})

    print("value=", value)

value= 2.0

print(a)

Tensor("add_1:0", dtype=float32)
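The second snippet prints 2.0 because, by the time `run` is called, the Python name `a` no longer refers to the placeholder but to the last node (`add_1`). Feeding a value for that node simply replaces the whole computation. A plain-Python sketch of the rebinding, using a hypothetical `Node` class and `make` helper (not TensorFlow code):

```python
class Node(object):
    """Hypothetical stand-in for a graph node; not a TensorFlow class."""

    def __init__(self, name):
        self.name = name


nodes = []                      # stand-in for the graph: every node ever created


def make(name):
    node = Node(name)
    nodes.append(node)
    return node


a = make("a")                   # Python name 'a' -> node "a" (the placeholder)
a = make("add")                 # 'a' is rebound; node "a" still exists in the graph
a = make("b")
a = make("add_1")

print(a.name)                   # add_1
print([n.name for n in nodes])  # ['a', 'add', 'b', 'add_1']
```

The placeholder node is still in the graph, only the Python handle to it is gone. In TensorFlow 1.x it should be possible to look it up again by name, e.g. with `tf.get_default_graph().get_tensor_by_name("a:0")`, but keeping a separate Python variable for each node you want to feed is the simpler habit.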

reset_graph()

del a

Calling a python function will execute it immediately. If a function operates on tensors, typically, this means it will add nodes to the graph.

def f(x):

    return x+1

a = tf.placeholder(tf.float32, name="a")

b = f(a)

b = tf.log(b)

b = f(b)

with tf.Session() as sess:

    value = sess.run(b, feed_dict={a: 2.})

    print("value=", value)

value= 2.09861

reset_graph()

del a,b

other useful constructs

One can evaluate multiple nodes at the same time. Within one call to 'run', each graph node is evaluated at most once.

reset_graph()

a = tf.constant(2, name="a")

b = tf.constant(1, name="b")

c = a+b

d = b+1

e = c*d

with tf.Session() as sess:

    value_of_c = sess.run(c)

    print("c=", value_of_c)

    value_of_d = sess.run(d)

    print("d=", value_of_d)

    value_of_e = sess.run(e) # recomputes c and d

    print("e=", value_of_e)

c= 3

d= 2

e= 6

with tf.Session() as sess:

    value_of_c,value_of_d,value_of_e = sess.run([c,d,e])

    # c and d are evaluated only once

    print("c=", value_of_c)

    print("d=", value_of_d)

    print("e=", value_of_e)

c= 3

d= 2

e= 6
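The difference between three separate `run` calls and one joint call can be made visible by counting how often each operation actually executes. The sketch below is plain Python with a per-call cache. The names `ops`, `evaluations` and `run` are hypothetical and only mimic the behaviour described above.

```python
# Hypothetical graph with constants a=2, b=1 folded in: node -> (operation, parents).
ops = {
    "c": (lambda: 2 + 1, []),
    "d": (lambda: 1 + 1, []),
    "e": (lambda c, d: c * d, ["c", "d"]),
}
evaluations = []   # records every time an operation actually executes


def run(fetches):
    """Evaluate all fetches; within one call every node is computed at most once."""
    cache = {}

    def value(name):
        if name not in cache:
            op, parents = ops[name]
            args = [value(p) for p in parents]
            evaluations.append(name)
            cache[name] = op(*args)
        return cache[name]

    return [value(f) for f in fetches]


for fetch in ["c", "d", "e"]:   # three separate calls: c, then d, then c+d+e again
    run([fetch])
separate = len(evaluations)     # 5 operation executions

del evaluations[:]
values = run(["c", "d", "e"])   # one joint call
joint = len(evaluations)        # 3 operation executions

print(values)                   # [3, 2, 6]
```

The cache lives only for the duration of one call, which is why nothing is reused between separate calls.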

Helper routines for graphs (many more exist)

# get tensorflow graph as python object

g=tf.get_default_graph()

print( g.get_operations() ) # quick check what's inside

[<tf.Operation 'a' type=Const>, <tf.Operation 'b' type=Const>, <tf.Operation 'add' type=Add>, <tf.Operation 'add_1/y' type=Const>, <tf.Operation 'add_1' type=Add>, <tf.Operation 'mul' type=Mul>]

# one cannot delete individual nodes from the graph

# but one can delete all, starting again with an empty graph

tf.reset_default_graph()

g=tf.get_default_graph()

print( g.get_operations() ) # nothing inside

[]

More caveats

beware of unintentionally changing the graph

reset_graph()

a = tf.constant(5)

b = tf.constant(2)

with tf.Session() as sess:

    for i in range(10):

        value = sess.run(a+b)

        print("i=", i, "i*value=", i*value)

i= 0 i*value= 0

i= 1 i*value= 7

i= 2 i*value= 14

i= 3 i*value= 21

i= 4 i*value= 28

i= 5 i*value= 35

i= 6 i*value= 42

i= 7 i*value= 49

i= 8 i*value= 56

i= 9 i*value= 63

# each construct 'a+b' creates a corresponding node in the graph

show_graph(tf.get_default_graph())

reset_graph()

To make sure you don't change the graph anymore, 'finalize' it.

a = tf.constant(5)

b = tf.constant(2)

g = tf.get_default_graph()

g.finalize() # allow no more changes to the graph

with tf.Session() as sess:

    for i in range(10):

        value = sess.run(a+b) # error, a new node would be created

        print("i=", i, "i*value=", i*value)

RuntimeError Traceback (most recent call last)

<ipython-input-280-9c1a9a0614ba> in <module>()

7 with tf.Session() as sess:

8 for i in range(10):

----> 9 value = sess.run(a+b) # error, a new node would be created

10 print("i=", i, "i*value=", i*value)

...

RuntimeError: Graph is finalized and cannot be modified.

reset_graph()

solution: only evaluate single tensors or lists of tensors

do not run 'run' on other constructs

a = tf.constant(5)

b = tf.constant(2)

a_plus_b = a+b

with tf.Session() as sess:

    for i in range(10):

        value = sess.run(a_plus_b)

        print("i=", i, "i*value=", i*value)

i= 0 i*value= 0

i= 1 i*value= 7

i= 2 i*value= 14

i= 3 i*value= 21

i= 4 i*value= 28

i= 5 i*value= 35

i= 6 i*value= 42

i= 7 i*value= 49

i= 8 i*value= 56

i= 9 i*value= 63

show_graph(tf.get_default_graph())
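Why the first loop grows the graph and the second does not can be seen with a small plain-Python model in which every `+` registers a new node. The `Tensor` class and the `graph_nodes` list are hypothetical stand-ins, not the TensorFlow classes of the same name.

```python
graph_nodes = []   # hypothetical stand-in for the default graph


class Tensor(object):
    """Toy node that registers itself in graph_nodes when created."""

    def __init__(self, name):
        self.name = name
        graph_nodes.append(self)

    def __add__(self, other):
        return Tensor("add")      # every '+' registers one more node


a = Tensor("a")
b = Tensor("b")

for i in range(10):
    _ = a + b                     # built inside the loop: one new node per iteration
grown = len(graph_nodes)          # 2 constants + 10 'add' nodes = 12

a_plus_b = a + b                  # built once ...
before = len(graph_nodes)
for i in range(10):
    _ = a_plus_b                  # ... and only referenced inside the loop
added = len(graph_nodes) - before # 0

print(grown, added)
```

The same reasoning applies to any expression written inside the argument of `run`: the expression is evaluated by Python first, and that is the step that adds nodes.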

Variables

cool feature: tensorflow graphs are 'stateful'

Variables store values and keep them between different evaluations

reset_graph()

a = tf.Variable(0, name="var") # new variable, to be initialized with value 0

b = a+1

inc = tf.assign(a, b, name="assignment") # shorthand: a.assign(b)

# this creates a node called 'assignment'

# evaluating 'assignment' causes the value of 'b' to be assigned to 'a'

# 'inc' is a python variable that points to the assignment node

print(a)

print(b)

print(inc)

print()

with tf.Session() as sess:

    sess.run(a.initializer) # initialize variable 'a'

    for i in range(5):

        value = sess.run(inc)

        print("step ", i, "value=", value)

<tf.Variable 'var:0' shape=() dtype=int32_ref>

Tensor("add:0", shape=(), dtype=int32)

Tensor("assignment:0", shape=(), dtype=int32_ref)

step 0 value= 1

step 1 value= 2

step 2 value= 3

step 3 value= 4

step 4 value= 5

show_graph(tf.get_default_graph())

reset_graph()

Use of 'assign' is crucial. THIS, e.g., does not work

a = tf.Variable(0, name="a") # new variable, to be initialized with value 0

b = tf.add(a, 1) # value of 'a' plus 1

init = tf.global_variables_initializer()

with tf.Session() as sess:

    sess.run(a.initializer) # initialize variable 'a'

    for i in range(5):

        value = sess.run(b) # evaluating 'b' does not change 'a'

        print("step ", i, "value=", value)

step 0 value= 1

step 1 value= 1

step 2 value= 1

step 3 value= 1

step 4 value= 1

reset_graph()

Use of 'assign' is crucial. Don't do THIS, either

a = tf.Variable(0, name="a") # new variable

with tf.Session() as sess:

    sess.run(a.initializer) # assign value 0 to variable 'a'

    for i in range(5):

        a = a+1 # creates new node in every step: a+1, a+1+1,...

        value = sess.run(a)

        print("step ", i, "value=", value)

    # in a real program, this would run out of memory quickly!

step 0 value= 1

step 1 value= 2

step 2 value= 3

step 3 value= 4

step 4 value= 5

show_graph(tf.get_default_graph())

reset_graph()

Back to 'assign'. This is the right thing to do.

a = tf.Variable(0, name="a")

inc = a.assign(a+1) # convenient shorthand for tf.assign(a,...)

with tf.Session() as sess:

    sess.run(a.initializer) # initialize variable 'a'

    for i in range(5):

        value = sess.run(inc)

        print("step ", i, "value=", value)

step 0 value= 1

step 1 value= 2

step 2 value= 3

step 3 value= 4

step 4 value= 5
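The contrast between reading a variable and assigning to it can be reduced to a few lines of plain Python. This is a minimal sketch with a hypothetical `Variable` class (not `tf.Variable`): `b` only reads the stored value, while `inc` writes the result back, which is the role `assign` plays in the graph.

```python
class Variable(object):
    """Hypothetical stateful node: keeps its value between evaluations."""

    def __init__(self, initial):
        self.initial = initial
        self.value = None          # unset until the initializer runs

    def initialize(self):
        self.value = self.initial


a = Variable(0)
a.initialize()


def b():                           # like b = a+1: reads 'a', changes nothing
    return a.value + 1


def inc():                         # like a.assign(a+1): writes the result back
    a.value = a.value + 1
    return a.value


reads = [b() for _ in range(3)]
incs = [inc() for _ in range(3)]
print(reads)                       # [1, 1, 1]
print(incs)                        # [1, 2, 3]
```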

reset_graph()

Variables can be initialized in different ways

# with a numeric constant, as before

a = tf.Variable(1, name="a")

print(a)

# with a numpy object

random_numpy = np.random.randn()

b = tf.Variable(random_numpy, name="c")

print(b)

# with a tensorflow tensor (here: randomly generated)

random_tensor = tf.random_normal([], mean=1, stddev=0.5)

c = tf.Variable(random_tensor, name="b")

print(c)

# with another tensorflow variable

d = tf.Variable(a, name="d")

print(d)

# every call creates a new variable (accessing old ones: later)

e = tf.Variable(1, name="a")

print(e)

<tf.Variable 'a:0' shape=() dtype=int32_ref>

<tf.Variable 'c:0' shape=() dtype=float32_ref>

<tf.Variable 'b:0' shape=() dtype=float32_ref>

<tf.Variable 'd:0' shape=() dtype=int32_ref>

<tf.Variable 'a_1:0' shape=() dtype=int32_ref>

initializing all variables separately would be tedious

there are convenient helper functions

init = tf.global_variables_initializer() # creates a new node in the graph

with tf.Session() as sess:

    sess.run(init)

    res_a,res_b,res_c,res_d,res_e = sess.run([a,b,c,d,e])

    print("a=",res_a)

    print("b=",res_b)

    print("c=",res_c)

    print("d=",res_d)

    print("e=",res_e)

a= 1

b= 0.496714

c= 0.841961

d= 1

e= 1

Tensors

for us, tensors are just arbitrary-dimensional analogs of matrices

scalar: 0-dim. $X$, e.g. $$5$$

vector: 1-dim, $X[i]$, e.g. $$\begin{pmatrix}3&-1&0.5\end{pmatrix} \quad\text{or}\quad\begin{pmatrix}3\\-1\\0.5\end{pmatrix}$$

matrix: 2-dim, $X[i,j]$, e.g. $$\begin{pmatrix}0&1\\-1&0\end{pmatrix}$$

tensor: any dim, e.g. $X[i,j,k,l]$

Everything we did before works with tensors as well

reset_graph()

a = tf.placeholder(tf.float32,[5,3,2]) # 3-dim tensor of size 5x3x2

print(a)

# with a list of numbers -> vector

digits = tf.constant([3,1,4]) # constant 1-dim tensor with entries [3,1,4]

print(digits)

# initialize a variable with a numpy object

random_numpy = np.random.randn(3,3,1,3) # 4-dim tensor of size 3x3x1x3

c = tf.Variable(random_numpy)

print(c)

# most functions operate componentwise on tensors

d = tf.exp(c+1)

print(d)

# special functions change the shape, e.g. "reductions"

e = tf.reduce_max(d) # find maximum of all entries

f = tf.reduce_sum(d, axis=0) # sum along first axis

print(e)

print(f)

Tensor("Placeholder:0", shape=(5, 3, 2), dtype=float32)

Tensor("Const:0", shape=(3,), dtype=int32)

<tf.Variable 'Variable:0' shape=(3, 3, 1, 3) dtype=float64_ref>

Tensor("Exp:0", shape=(3, 3, 1, 3), dtype=float64)

Tensor("Max:0", shape=(), dtype=float64)

Tensor("Sum:0", shape=(3, 1, 3), dtype=float64)

Note: take care which way you do things, python or tensorflow

# e.g. get a tensor's shape

s = c.shape # result is a python object: a TensorShape with 4 entries

print(s)

(3, 3, 1, 3)

# e.g. get a tensor's shape

s = tf.shape(c) # result is a node in the graph that holds a vector of length 4

print(s)

Tensor("Shape:0", shape=(4,), dtype=int32)

# e.g. get a tensor's shape

s = tf.shape(c) # result is a node in the graph that holds a vector of length 4

with tf.Session() as sess:

    val = sess.run(s) # evaluate

    print(val)

[3 3 1 3]

Example: Linear Regression

First: using the closed-form equations

reset_graph()

try:

    from urllib.request import urlopen # Python 3

except ImportError:

    from urllib2 import urlopen # Python 2

# let's load some data in numpy

fid = urlopen("https://cvml.ist.ac.at/courses/DLWT_W17/data/Xtrain.txt")

Xdata = np.loadtxt(fid)

fid = urlopen("https://cvml.ist.ac.at/courses/DLWT_W17/data/Ytrain.txt")

Ydata = np.loadtxt(fid).reshape(-1, 1)

print("data shape = ", Xdata.shape)

print("labels shape = ", Ydata.shape)

Xtrn,Ytrn = Xdata[::2],Ydata[::2] # half of the points for training

ntrn,dim = Xtrn.shape

Xval,Yval = Xdata[1::2],Ydata[1::2] # rest for model evaluation

data shape = (1213, 576)

labels shape = (1213, 1)

least-squares regression:

$$\text{inputs: }X=\begin{pmatrix}x_1\\x_2\\\vdots\\x_n\end{pmatrix}\in\mathbb{R}^{n\times d} \qquad \text{outputs: }Y=\begin{pmatrix}y_1\\y_2\\\vdots\\y_n\end{pmatrix}\in\mathbb{R}^{n\times 1} $$

task: solve

$$w^\ast=\operatorname{argmin}_w \frac{1}{n}\sum_{i=1}^n (w^\top x_i - y_i)^2$$

closed-form solution: $$w^\ast = (X^\top X)^{-1}X^\top Y$$
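For completeness, a short derivation of this formula, assuming that $X^\top X$ is invertible. Writing the objective in matrix form and taking the gradient with respect to $w$ gives

$$L(w)=\frac{1}{n}\sum_{i=1}^n (w^\top x_i - y_i)^2=\frac{1}{n}\|Xw-Y\|^2,\qquad \nabla_w L(w)=\frac{2}{n}X^\top(Xw-Y)$$

Setting the gradient to zero at the minimizer yields the normal equations and, after inverting $X^\top X$, the closed form:

$$\nabla_w L(w^\ast)=0 \;\Longleftrightarrow\; X^\top X\,w^\ast = X^\top Y \;\Longleftrightarrow\; w^\ast=(X^\top X)^{-1}X^\top Y$$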

# numpy solution

XtX = np.dot(Xtrn.T, Xtrn)

XtY = np.dot(Xtrn.T, Ytrn)

w = np.dot(np.linalg.inv(XtX), XtY)

print("w[:5]=", w[:5].T)

w[:5]= [[ 63.0397397 -102.76813244 55.63665877 -40.55369632 41.6927566 ]]

$$\text{loss of any $w$:}\qquad L(w) = \frac{1}{n}\sum_{i=1}^n (w^\top x_i - y_i)^2$$

pred = np.dot(Xtrn, w)

loss = np.mean(np.square(pred-Ytrn))

print("loss on training data =", loss)

pred_new = np.dot(Xval, w)

loss_new = np.mean(np.square(pred_new-Yval))

print("loss on new data=", loss_new)

loss on training data = 0.0163182666571

loss on new data= 53.6288760381

reset_graph()

The same in tensorflow:

# define graph

X = tf.constant(Xtrn, name="Xtrn")

Y = tf.constant(Ytrn, name="Ytrn")

Xt = tf.transpose(X, name="Xtranspose")

XtX = tf.matmul(Xt, X, name="XtX")

XtY = tf.matmul(Xt, Y, name="XtY")

w = tf.matmul(tf.matrix_inverse(XtX), XtY, name="w")

print("w:", w)

w: Tensor("w:0", shape=(576, 1), dtype=float64)

pred = tf.matmul(X, w, name="pred")

loss = tf.reduce_mean(tf.square(pred-Y), name="loss") # mean of tensor elements

# evaluate graph

with tf.Session() as sess:

    w_value,loss_value = sess.run([w, loss])

    print("w[:5]=", w_value[:5].T)

    print("loss on training data =", loss_value)

w[:5]= [[ 63.03974311 -102.76813954 55.63666054 -40.55369826 41.69276449]]

loss on training data = 0.0163182666571

show_graph(tf.get_default_graph())

# additional graph nodes

Xnew = tf.constant(Xval, name="Xnew")

Ynew = tf.constant(Yval, name="Ynew")

prednew = tf.matmul(Xnew, w, name="prednew")

lossnew = tf.reduce_mean(tf.square(prednew-Ynew), name="lossnew")

with tf.Session() as sess:

    loss_val = sess.run(lossnew)

    print("loss on new data=", loss_val) # print the TensorFlow result, not the NumPy value loss_new

loss on new data= 53.6288760381

reset_graph()

More convenient with placeholders

reset_graph()

X = tf.placeholder(dtype=tf.float64, name="Xtrn")

Y = tf.placeholder(dtype=tf.float64, name="Ytrn")

Xt = tf.transpose(X, name="Xtranspose")

XtX = tf.matmul(Xt, X, name="XtX")

XtY = tf.matmul(Xt, Y, name="XtY")

w = tf.matmul(tf.matrix_inverse(XtX), XtY, name="w")

pred = tf.matmul(X, w, name="pred")

loss = tf.reduce_mean(tf.square(pred-Y),name="loss")

with tf.Session() as sess:

    w_value = sess.run(w, feed_dict={X: Xtrn, Y:Ytrn})

    loss_train = sess.run(loss, feed_dict={X: Xtrn, Y:Ytrn})

    print("w[:5]=", w_value[:5].T)

    print("loss on training data =", loss_train)

    loss_val = sess.run(loss, feed_dict={X: Xval, Y:Yval})

    print("loss on validation data =", loss_val)

w[:5]= [[ 63.03974257 -102.76813889 55.63666194 -40.55369348 41.69275296]]

loss on training data = 0.0163182666571

loss on validation data = 0.017969949099

Wrong! Evaluating 'loss' on Xval recomputes $w$ from new X/Y-data!
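The pitfall can be reproduced without TensorFlow. In the following NumPy sketch (the synthetic data and the helper names `fit_w` and `mse` are assumptions for illustration), refitting $w$ on the evaluation data, which is what the placeholder graph effectively does, always gives a loss no larger than evaluating the $w$ learned from the training data:

```python
import numpy as np

def fit_w(X, Y):
    """Closed-form least-squares solution of the normal equations."""
    return np.linalg.solve(X.T.dot(X), X.T.dot(Y))

def mse(X, Y, w):
    """Mean squared error of the linear predictor w on (X, Y)."""
    return np.mean(np.square(X.dot(w) - Y))

rng = np.random.RandomState(0)             # synthetic data, illustration only
X_all = rng.randn(60, 20)
Y_all = X_all.dot(rng.randn(20, 1)) + rng.randn(60, 1)
X_a, Y_a = X_all[::2], Y_all[::2]          # "training" half
X_b, Y_b = X_all[1::2], Y_all[1::2]        # "validation" half

w_a = fit_w(X_a, Y_a)                      # parameters learned from the training half

loss_refit = mse(X_b, Y_b, fit_w(X_b, Y_b))  # w silently recomputed from the validation half
loss_fixed = mse(X_b, Y_b, w_a)              # w kept fixed: the quantity we want to measure

print("validation loss with refitted w:", loss_refit)
print("validation loss with fixed w:   ", loss_fixed)
```

The refitted number is misleading because the least-squares solution on the validation half minimizes exactly the quantity being reported.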

reset_graph()

Good use of a variable: keep parameter between evaluations

X = tf.placeholder(dtype=tf.float64, name="X")

Y = tf.placeholder(dtype=tf.float64, name="Y")

w = tf.Variable(tf.zeros(shape=(dim,1), dtype=tf.float64), name="w")

Xt = tf.transpose(X, name="Xtranspose")

XtX = tf.matmul(Xt, X, name="XtX")

XtY = tf.matmul(Xt, Y, name="XtY")

compute_w = w.assign( tf.matmul(tf.matrix_inverse(XtX), XtY, name="w") )

pred = tf.matmul(X, w, name="pred")

loss = tf.reduce_mean(tf.square(pred-Y), name="loss")

init = tf.global_variables_initializer()

with tf.Session() as sess:

    sess.run(init)

    w_value = sess.run(compute_w, feed_dict={X: Xtrn, Y:Ytrn})

    print("w[:5]=", w_value[:5].T)

    loss_train = sess.run(loss, feed_dict={X: Xtrn, Y:Ytrn})

    print("loss on training data =", loss_train)

    loss_val = sess.run(loss, feed_dict={X: Xval, Y:Yval})

    print("loss on validation data =", loss_val)

w[:5]= [[ 63.03974257 -102.76813889 55.63666194 -40.55369348 41.69275296]]

loss on training data = 0.0163182666571

loss on validation data = 53.628877667

show_graph(tf.get_default_graph())

Using (Batch) Gradient Descent

$$f(w) = \frac{1}{n}\sum_{i=1}^n (w^\top x_i - y_i)^2$$

Algorithm:

$$w_0 \leftarrow 0$$

repeat until convergence:

$$w_{t+1} \leftarrow w_t - \eta\nabla_w f(w_t)$$

reset_graph()

nsteps = 10000

eta = 0.005

X = tf.placeholder(dtype=tf.float32, name="X")

Y = tf.placeholder(dtype=tf.float32, name="Y")

w = tf.Variable(tf.zeros(shape=(dim,1), dtype=tf.float32), name="w")

pred = tf.matmul(X, w, name="pred")

residual = pred-Y

loss = tf.reduce_mean(tf.square(residual), name="loss")

Xt = tf.transpose(X, name="Xtranspose")

gradient_of_loss = 2./ntrn * tf.matmul(Xt, residual) # derived on paper!

update_w = w.assign( w - eta*gradient_of_loss )

init = tf.global_variables_initializer()

with tf.Session() as sess:

    sess.run(init) # init variables

    for i in range(nsteps):

        sess.run(update_w, feed_dict={X: Xtrn, Y:Ytrn}) # execute update to w

        if i%1000 == 0:

            loss_train = sess.run(loss, feed_dict={X: Xtrn, Y:Ytrn})

            loss_val = sess.run(loss, feed_dict={X: Xval, Y:Yval})

            print("step", i, "loss(train)", loss_train, "loss(val)", loss_val)

step 0 loss(train) 0.993715 loss(val) 0.987678

step 1000 loss(train) 0.394694 loss(val) 0.446139

step 2000 loss(train) 0.349569 loss(val) 0.421518

step 3000 loss(train) 0.330264 loss(val) 0.414118

step 4000 loss(train) 0.318032 loss(val) 0.410647

step 5000 loss(train) 0.309016 loss(val) 0.408663

step 6000 loss(train) 0.301906 loss(val) 0.407509

step 7000 loss(train) 0.29608 loss(val) 0.406911

step 8000 loss(train) 0.291178 loss(val) 0.406709

step 9000 loss(train) 0.286972 loss(val) 0.406793
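The hand-derived gradient $\frac{2}{n}X^\top(Xw-Y)$ used in `gradient_of_loss` can be checked numerically before trusting it in a long optimization run. A minimal NumPy sketch, assuming small synthetic data (the names `X_demo`, `Y_demo`, `w_demo` and the sizes are illustrative, not the course data): it compares the analytic gradient with central finite differences and then runs a few plain gradient-descent steps with the same $\eta$ as above.

```python
import numpy as np

def loss_fn(w, X, Y):
    """Mean squared error f(w) = 1/n * sum_i (w^T x_i - y_i)^2."""
    return np.mean(np.square(X.dot(w) - Y))

def grad_fn(w, X, Y):
    """Analytic gradient 2/n * X^T (X w - Y)."""
    return 2.0 / X.shape[0] * X.T.dot(X.dot(w) - Y)

rng = np.random.RandomState(0)       # synthetic data, illustration only
X_demo = rng.randn(50, 4)
Y_demo = rng.randn(50, 1)
w_demo = rng.randn(4, 1)

# central finite differences, one coordinate at a time
h = 1e-6
numeric = np.zeros_like(w_demo)
for j in range(w_demo.shape[0]):
    step = np.zeros_like(w_demo)
    step[j] = h
    numeric[j] = (loss_fn(w_demo + step, X_demo, Y_demo)
                  - loss_fn(w_demo - step, X_demo, Y_demo)) / (2.0 * h)

analytic = grad_fn(w_demo, X_demo, Y_demo)
print("max |analytic - numeric| =", np.abs(analytic - numeric).max())

# a few steps of batch gradient descent, starting from w_0 = 0
eta = 0.005
w_gd = np.zeros((4, 1))
losses = [loss_fn(w_gd, X_demo, Y_demo)]
for t in range(200):
    w_gd = w_gd - eta * grad_fn(w_gd, X_demo, Y_demo)
    losses.append(loss_fn(w_gd, X_demo, Y_demo))
print("loss before:", losses[0], "after 200 steps:", losses[-1])
```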

one of the main benefits of using tensorflow:

automatic differentiation

(i.e. gradients can be computed automatically)

reset_graph()

nsteps = 10000

eta = 0.005

X = tf.placeholder(dtype=tf.float32, name="X")

Y = tf.placeholder(dtype=tf.float32, name="Y")

w = tf.Variable(tf.zeros(shape=(dim,1), dtype=tf.float32), name="w")

pred = tf.matmul(X, w, name="pred")

residual = pred-Y

loss = tf.reduce_mean(tf.square(residual), name="loss")

gradient_of_loss = tf.gradients(loss, [w])[0] # <-- automatic differentiation

update_w = w.assign( w - eta*gradient_of_loss )

init = tf.global_variables_initializer()

with tf.Session() as sess:

    sess.run(init) # init variables

    for i in range(nsteps):

        sess.run(update_w, feed_dict={X: Xtrn, Y:Ytrn}) # execute update to w

        if i%1000 == 0:

            loss_train = sess.run(loss, feed_dict={X: Xtrn, Y:Ytrn})

            loss_val = sess.run(loss, feed_dict={X: Xval, Y:Yval})

            print("step", i, "loss(train)", loss_train, "loss(val)", loss_val)

step 0 loss(train) 0.993715 loss(val) 0.987678

step 1000 loss(train) 0.394694 loss(val) 0.446139

step 2000 loss(train) 0.349569 loss(val) 0.421518

step 3000 loss(train) 0.330264 loss(val) 0.414118

step 4000 loss(train) 0.318032 loss(val) 0.410647

step 5000 loss(train) 0.309016 loss(val) 0.408663

step 6000 loss(train) 0.301906 loss(val) 0.407509

step 7000 loss(train) 0.29608 loss(val) 0.406912

step 8000 loss(train) 0.291178 loss(val) 0.406709

step 9000 loss(train) 0.286972 loss(val) 0.406793

show_graph(tf.get_default_graph())

# numpy

def my_func(a, b):

    z = 0

    for i in range(10):

        z = a * np.cos(z + i) + z * np.sin(b - i)

    return z

# we can evaluate the function for any a,b

print(my_func(0.2, 0.3))

-0.0403213363474

reset_graph()

# In tensorflow, we can evaluate not only the function,

# but also its partial derivatives, without (much) overhead

a = tf.Variable(0.2, name="a")

b = tf.Variable(0.3, name="b")

z = tf.constant(0.0, name="z")

for i in range(10):

    z = a * tf.cos(z + i) + z * tf.sin(b - i) # this builds a complex graph

grads = tf.gradients(z, [a, b]) # gradient of z with respect to a and b

init = tf.global_variables_initializer()

with tf.Session() as sess:

    sess.run(init)

    val = sess.run(z)

    print("val=", val)

    g = sess.run(grads)

    print("gradient=", g)

val= -0.0403213

gradient= [0.20793509, 0.16816998]
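As a sanity check that is independent of TensorFlow, the same partial derivatives can be approximated with central finite differences on the NumPy version of `my_func`. This is only a sketch: the helper `central_diff` and the step size `h` are assumptions, and finite differences need extra function evaluations for every input, whereas automatic differentiation does not.

```python
import numpy as np

def my_func(a, b):
    z = 0.0
    for i in range(10):
        z = a * np.cos(z + i) + z * np.sin(b - i)
    return z

def central_diff(f, x, h=1e-6):
    """Approximate f'(x) by (f(x+h) - f(x-h)) / (2h)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

a0, b0 = 0.2, 0.3
dz_da = central_diff(lambda a: my_func(a, b0), a0)   # vary a, keep b fixed
dz_db = central_diff(lambda b: my_func(a0, b), b0)   # vary b, keep a fixed

print("z     =", my_func(a0, b0))
print("dz/da ~", dz_da)
print("dz/db ~", dz_db)
```

The two approximations should agree with the gradient printed above to several digits, up to float32 rounding on the TensorFlow side.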

show_graph(tf.get_default_graph())

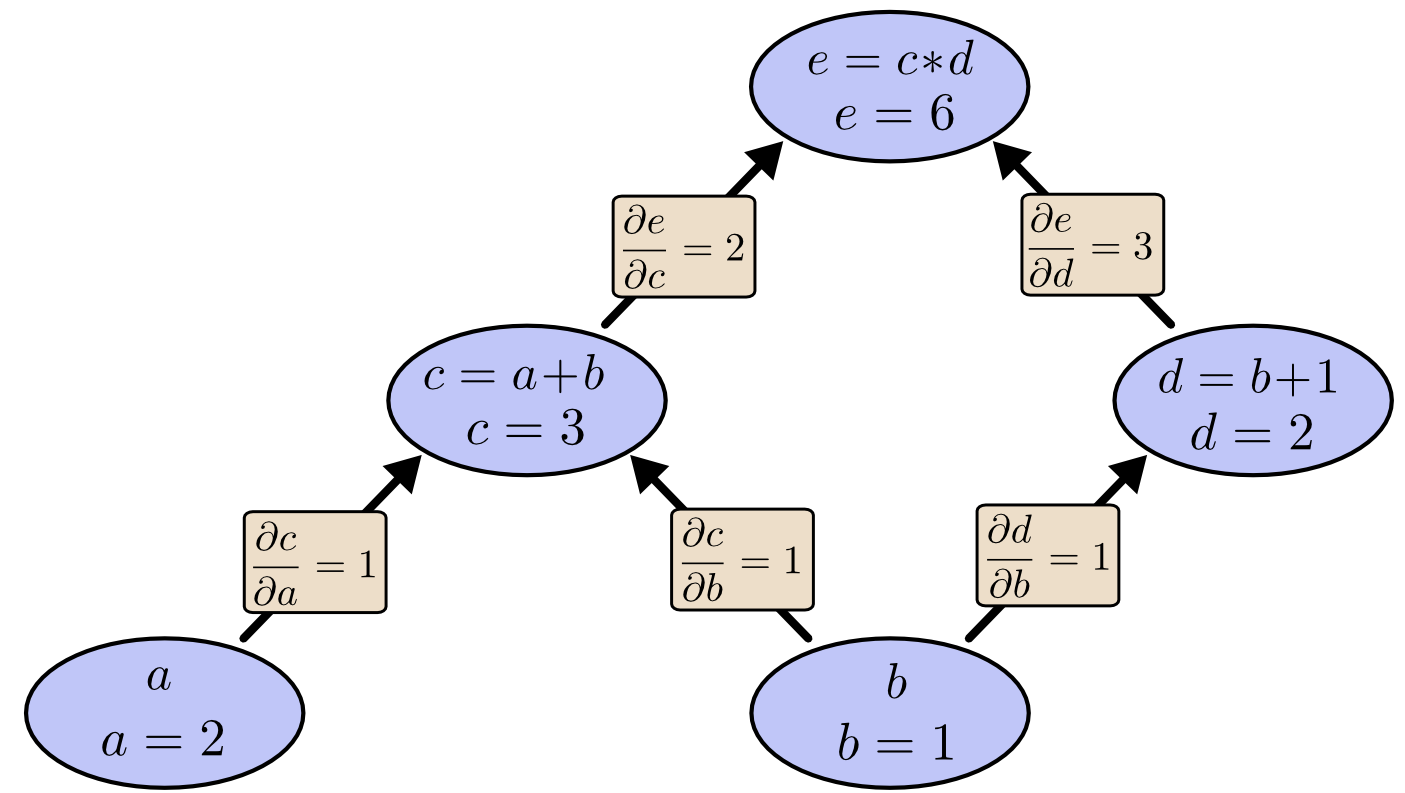

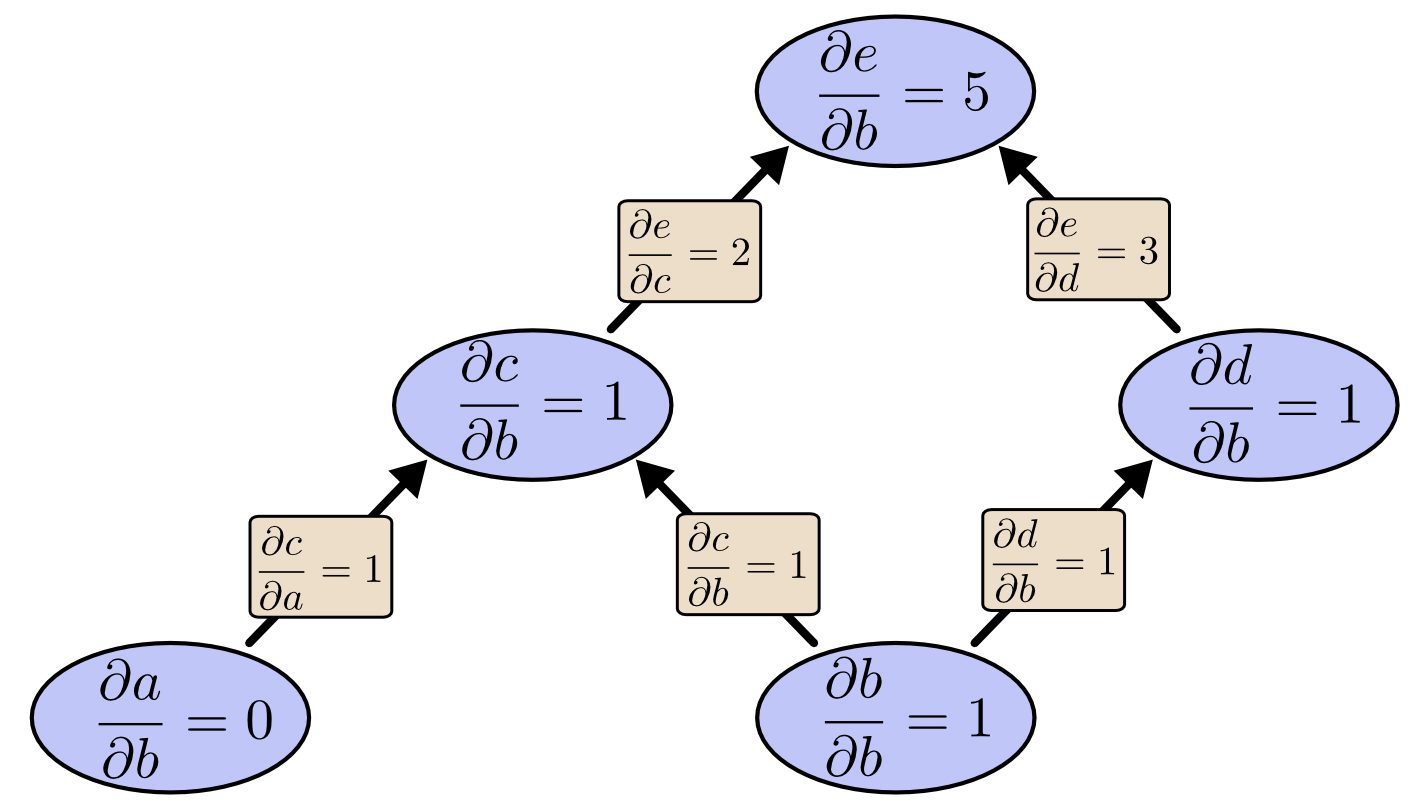

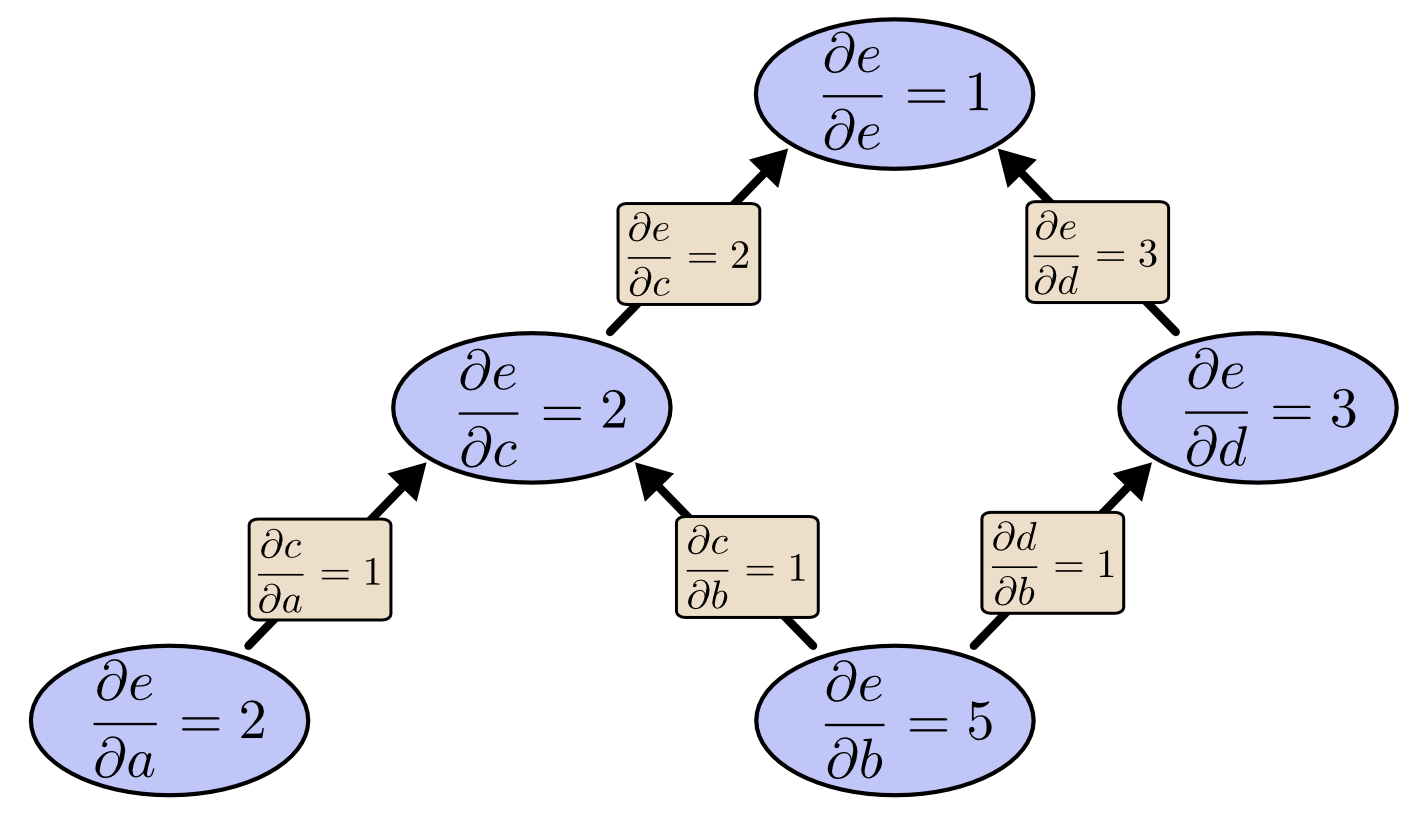

Chain rule: The derivative of a node w.r.t. any other node is the sum, over all paths connecting them, of the products of the edge derivatives along each path!

How to compute this efficiently?

Forward mode: compute derivative of all nodes w.r.t. a fixed one (here $b$)

Backward mode: compute derivative of a fixed node (here $e$) w.r.t. all nodes

This trick has many names; in machine learning it is usually called the backpropagation algorithm.
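For the small graph of $(a+b)*(b+1)$, both modes can be written out by hand. The sketch below assumes intermediate nodes named $c=a+b$ and $d=b+1$ with output $e=c\cdot d$ (the names $c$ and $d$ and the input values are assumptions for illustration). Forward mode propagates the derivative with respect to $b$ from the input to every node; backward mode propagates the derivative of $e$ from the output back to every node.

```python
# Graph: c = a + b, d = b + 1, e = c * d   (c and d are assumed node names)
a, b = 2.0, 1.0                      # hypothetical input values

# forward pass: evaluate every node
c = a + b
d = b + 1.0
e = c * d

# local derivative on each edge of the graph
dc_da, dc_db = 1.0, 1.0
dd_db = 1.0
de_dc, de_dd = d, c

# Forward mode: d(node)/db for all nodes, starting at the input b
forward = {"a": 0.0, "b": 1.0}
forward["c"] = dc_da * forward["a"] + dc_db * forward["b"]
forward["d"] = dd_db * forward["b"]
forward["e"] = de_dc * forward["c"] + de_dd * forward["d"]

# Backward mode: de/d(node) for all nodes, starting at the output e
backward = {"e": 1.0}
backward["c"] = backward["e"] * de_dc
backward["d"] = backward["e"] * de_dd
backward["a"] = backward["c"] * dc_da
backward["b"] = backward["c"] * dc_db + backward["d"] * dd_db  # two paths from b to e

print("forward mode,  d(node)/db:", forward)
print("backward mode, de/d(node):", backward)
```

One forward sweep yields the derivatives of all nodes with respect to a single input, while one backward sweep yields the derivatives of a single output with respect to all inputs, which is the case of interest when a scalar loss depends on many parameters.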

Homework

1. practice building and running tensorflow graphs

2. implement Logistic Regression, i.e. learn $w$ by minimizing the logistic loss $$L(w) = \frac{1}{n}\sum_{i=1}^n \log\big(1+\exp(-y_i w^\top x_i)\big)$$ using a) fixed data, and b) data handed in via placeholders

3. try different learning rates to find one that converges faster than $\eta=0.001$

4. (optional) create a version with analytically computed gradients and compare its speed

hand-in requirement

- upload your code for 2a) and 2b) with a reasonable $\eta$ to the git server

Things we haven't seen:

1. GPU usage

2. variable scopes / re-using variables by name

3. saving graphs to disk and loading them back

4. saving variable contents to disk and loading them back

5. ...

GPU usage: we didn't see it, because it happens transparently.

reset_graph()

# Creates a graph.

a = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[2, 3], name='a')

sess = tf.Session()

# Runs the op.

options = tf.RunOptions(output_partition_graphs=True)

metadata = tf.RunMetadata()

a_val = sess.run(a, options=options, run_metadata=metadata)

# Show **where** the computation happened

print(metadata.partition_graphs)

[node {

name: "a"

op: "Const"

device: "/job:localhost/replica:0/task:0/gpu:0"

attr {

key: "dtype"

value {

type: DT_FLOAT

}

}

attr {

key: "value"

value {

tensor {

dtype: DT_FLOAT

tensor_shape {

dim {

size: 2

}

dim {

size: 3

}

}

tensor_content: "\000\000\200?\000\000\000@\000\000@@\000\000\200@\000\000\240@\000\000\300@"

}

}

}

}

node {

name: "a/_0"

op: "_Send"

input: "a"

device: "/job:localhost/replica:0/task:0/gpu:0"

attr {

key: "T"

value {

type: DT_FLOAT

}

}

attr {

key: "client_terminated"

value {

b: false

}

}

attr {

key: "recv_device"

value {

s: "/job:localhost/replica:0/task:0/cpu:0"

}

}

attr {

key: "send_device"

value {

s: "/job:localhost/replica:0/task:0/gpu:0"

}

}

attr {

key: "send_device_incarnation"

value {

i: 1

}

}

attr {

key: "tensor_name"

value {

s: "edge_5_a"

}

}

}

library {

}

versions {

producer: 24

}

, node {

name: "a/_1"

op: "_Recv"

device: "/job:localhost/replica:0/task:0/cpu:0"

attr {

key: "client_terminated"

value {

b: false

}

}

attr {

key: "recv_device"

value {

s: "/job:localhost/replica:0/task:0/cpu:0"

}

}

attr {

key: "send_device"

value {

s: "/job:localhost/replica:0/task:0/gpu:0"

}

}

attr {

key: "send_device_incarnation"

value {

i: 1

}

}

attr {

key: "tensor_name"

value {

s: "edge_5_a"

}

}

attr {

key: "tensor_type"

value {

type: DT_FLOAT

}

}

}

node {

name: "_retval_a_0_0"

op: "_Retval"

input: "a/_1"

device: "/job:localhost/replica:0/task:0/cpu:0"

attr {

key: "T"

value {

type: DT_FLOAT

}

}

attr {

key: "index"

value {

i: 0

}

}

}

library {

}

versions {

producer: 24

}

]

---------------------------------------------------

2 x Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz (14 core + Hyperthreading)

In [4]: def f():

   ...:     A = np.random.randn(10000,10000).astype(np.float32)

   ...:     Asquared = np.dot(A,A)

   ...:

   ...: %time f()

   ...:

CPU times: user 32.6 s, sys: 1.15 s, total: 33.7 s

Wall time: 33.1 s

In [5]: def f():

   ...:     A = np.random.randn(10000,10000).astype(np.float64)

   ...:     Asquared = np.dot(A,A)

   ...:

   ...: %time f()

   ...:

CPU times: user 1min 8s, sys: 1.68 s, total: 1min 9s

Wall time: 1min 8s

2 x Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz (14 core + Hyperthreading)

In [6]: with tf.device("/cpu:0"):

   ...:     A = tf.random_normal([10000,10000], dtype=tf.float32)

   ...:     Asquared = tf.matmul(A,A, name="A2")

   ...:

   ...: with tf.Session() as sess:

   ...:     %time res = sess.run(Asquared)

   ...:

2017-11-25 12:24:04.899238: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1120]...

CPU times: user 3min 3s, sys: 1.24 s, total: 3min 4s

Wall time: 3.45 s

In [7]: with tf.device("/cpu:0"):

   ...:     A = tf.random_normal([10000,10000], dtype=tf.float64)

   ...:     Asquared = tf.matmul(A,A, name="A2")

   ...:

   ...: with tf.Session() as sess:

   ...:     %time res = sess.run(Asquared)

   ...:

2017-11-25 12:24:26.075294: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1120]...

CPU times: user 6min 10s, sys: 2.54 s, total: 6min 12s

Wall time: 6.91 s

Tesla P100-PCIE-16GB

In [8]: with tf.device("/gpu:0"):

   ...:     A = tf.random_normal([10000,10000], dtype=tf.float32)

   ...:     Asquared = tf.matmul(A,A, name="A2")

   ...:

   ...: with tf.Session() as sess:

   ...:     %time res = sess.run(Asquared)

   ...:

2017-11-25 12:26:26.685615: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1120]...

CPU times: user 236 ms, sys: 140 ms, total: 376 ms

Wall time: 358 ms

In [9]: with tf.device("/gpu:0"):

   ...:     A = tf.random_normal([10000,10000], dtype=tf.float64)

   ...:     Asquared = tf.matmul(A,A, name="A2")

   ...:

   ...: with tf.Session() as sess:

   ...:     %time res = sess.run(Asquared)

   ...:

2017-11-25 12:26:43.021277: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1120]...

CPU times: user 72 ms, sys: 568 ms, total: 640 ms

Wall time: 634 ms
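To put the wall times into perspective, they can be converted into an approximate arithmetic throughput. The sketch below assumes the usual count of about $2n^3$ floating-point operations for multiplying two $n\times n$ matrices. Since each timed call also includes generating the random matrix (and, for TensorFlow, fetching the result), the numbers are only rough lower bounds on the matrix-multiplication throughput.

```python
n = 10000
flops = 2.0 * n ** 3     # assumed operation count: about 2*n^3 for an n x n matrix product

# wall times in seconds, copied from the measurements above
wall_seconds = {
    ("numpy, CPU", "float32"): 33.1,
    ("numpy, CPU", "float64"): 68.0,        # reported as "1min 8s"
    ("tensorflow, CPU", "float32"): 3.45,
    ("tensorflow, CPU", "float64"): 6.91,
    ("tensorflow, GPU", "float32"): 0.358,
    ("tensorflow, GPU", "float64"): 0.634,
}

tflops = {}
for key in sorted(wall_seconds):
    tflops[key] = flops / wall_seconds[key] / 1e12
    print("%-16s %-8s %7.3f TFLOP/s" % (key[0], key[1], tflops[key]))

# relative speed-up of the GPU run over the TensorFlow CPU run (float32)
speedup = wall_seconds[("tensorflow, CPU", "float32")] / wall_seconds[("tensorflow, GPU", "float32")]
print("GPU vs. CPU (tensorflow, float32): %.1fx" % speedup)
```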
